Big numbers and a big goal

What all of this adds up to

If I show you my hand as if to wave at you, like this ✋, and then give you a thumbs up, like this 👍, most people intuitively see that the difference between the two signs is four fingers. Humans have evolved to become very good at immediately grasping small numbers, which is useful for, say, keeping track of how many predators are hiding in the brush or how many people sitting around a fire still need a plate of food. This ability was first called subitizing by the researcher E.L. Kaufman in 1949.

It doesn’t take much for most folks to find the limits of our ability to subitize. How many of you can glance at cartons of cherry tomatoes at the store and identify which one has the most tomatoes? There can’t be more than a few dozen in each container, and yet our minds already struggle to process the differences between them. In fact, studies show that beyond four or five subjects, our ability to subitize degrades rapidly unless the subjects are arranged in a pattern or we have a prior familiarity with them.

Our inability to innately grasp larger numbers, sometimes called number numbness, may be hindering our ability to truly comprehend the exponential pace of development taking place in the field of artificial intelligence.

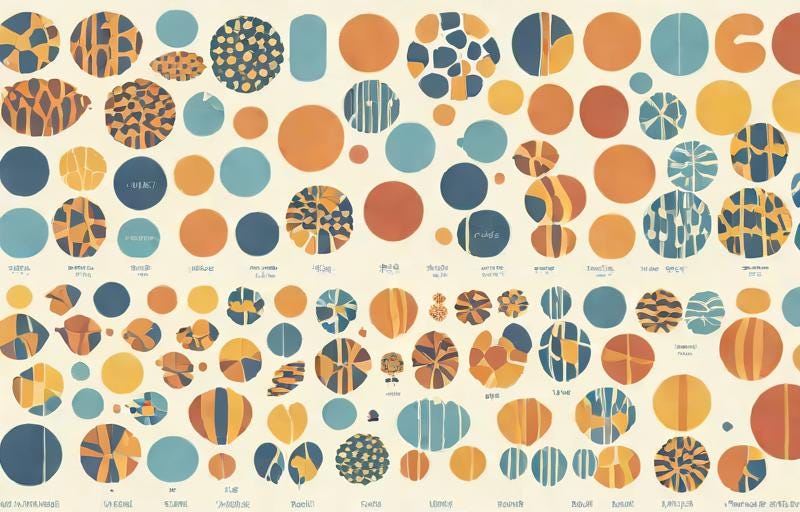

Consider the following figures: The first in the line of now-famous GPT large language models (LLMs), GPT-1, had 117 million parameters. You can think of a parameter as one of the adjustable numeric values a model tunes during training. Next, GPT-2 had 1.5 billion parameters, and finally, GPT-3, a later version of which initially powered ChatGPT when it launched, weighed in at 175 billion parameters.

Of course, we all know these numbers are getting bigger, but the question is really, by how much? It’s really hard to subitize, to feel the difference between such large quantities, which means we are missing the true scale of progress in the GPT series of LLMs.
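One direct way to answer "by how much?" is to divide each model's parameter count by its predecessor's. The snippet below is a minimal sketch that uses only the three figures quoted above; the variable names are illustrative.

```python
# Parameter counts as quoted in the text.
params = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}

names = list(params)

# Growth factor from each model to the next one in the series.
growth = {
    f"{prev} -> {nxt}": params[nxt] / params[prev]
    for prev, nxt in zip(names, names[1:])
}

# Growth factor across the whole series.
overall = params["GPT-3"] / params["GPT-1"]

for step, factor in growth.items():
    print(f"{step}: about {factor:,.1f}x")
print(f"GPT-1 -> GPT-3: about {overall:,.0f}x")
```

Seen as ratios, the second jump is roughly nine times larger than the first, which is the kind of acceleration raw counts tend to hide.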

One way to try to get around number numbness is through substitution. What if, instead of parameters, we think about these figures in terms of seconds of time? 117 million seconds, the same number as parameters in GPT-1, is equivalent to a timespan of about four years, the time between U.S. presidential elections or World Cups. 1.5 billion seconds is almost 48 years. 175 billion seconds, the same number as parameters in GPT-3, is approximately 5,500 years, a timespan that would stretch back to the late Stone Age or early Bronze Age, around 3,500 BCE.

117 million seconds = ~4 years

1.5 billion seconds = ~48 years

175 billion seconds = ~5,500 years
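The substitution is plain division, so it is easy to check. The sketch below assumes a year of 365.25 days (an assumption of this example, not something the text specifies) and treats each parameter count as a number of seconds, so the exact results differ a little from the rounded figures.

```python
# Assumption: one year = 365.25 days, to average out leap years.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds

# Parameter counts as quoted in the text, read here as seconds.
PARAMETER_COUNTS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}


def seconds_to_years(seconds: float) -> float:
    """Convert a duration in seconds to years."""
    return seconds / SECONDS_PER_YEAR


years_by_model = {
    name: seconds_to_years(count) for name, count in PARAMETER_COUNTS.items()
}

for name, years in years_by_model.items():
    print(f"{name}: {years:,.1f} years")
```

Using a 365-day year instead changes each result by well under one percent, so the choice of year length does not affect the comparison.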

AI progress is exponential, and while these orders of magnitude can be difficult to discern, it is important to maintain our awareness of how much more powerful this technology is becoming, and how quickly.

It isn’t only the number of parameters that is on a stratospheric rise. The training data sets for frontier AI models are measured in terabytes (1,000 gigabytes) and are also rapidly increasing in size. The computational hardware used to train and operate these models, graphics processing units, or GPUs, is often assessed based on the number of mathematical operations it can perform in one second, with the latest chips capable of two quadrillion (15 zeroes) calculations per second. Even our substitution trick fails when these great sums grow beyond our concept of time.

In the context of AI, big numbers matter because, for the time being, simply increasing the amount of data (the bytes) and the amount of compute (the calculations and parameters) seems to be enough to give these systems new abilities.

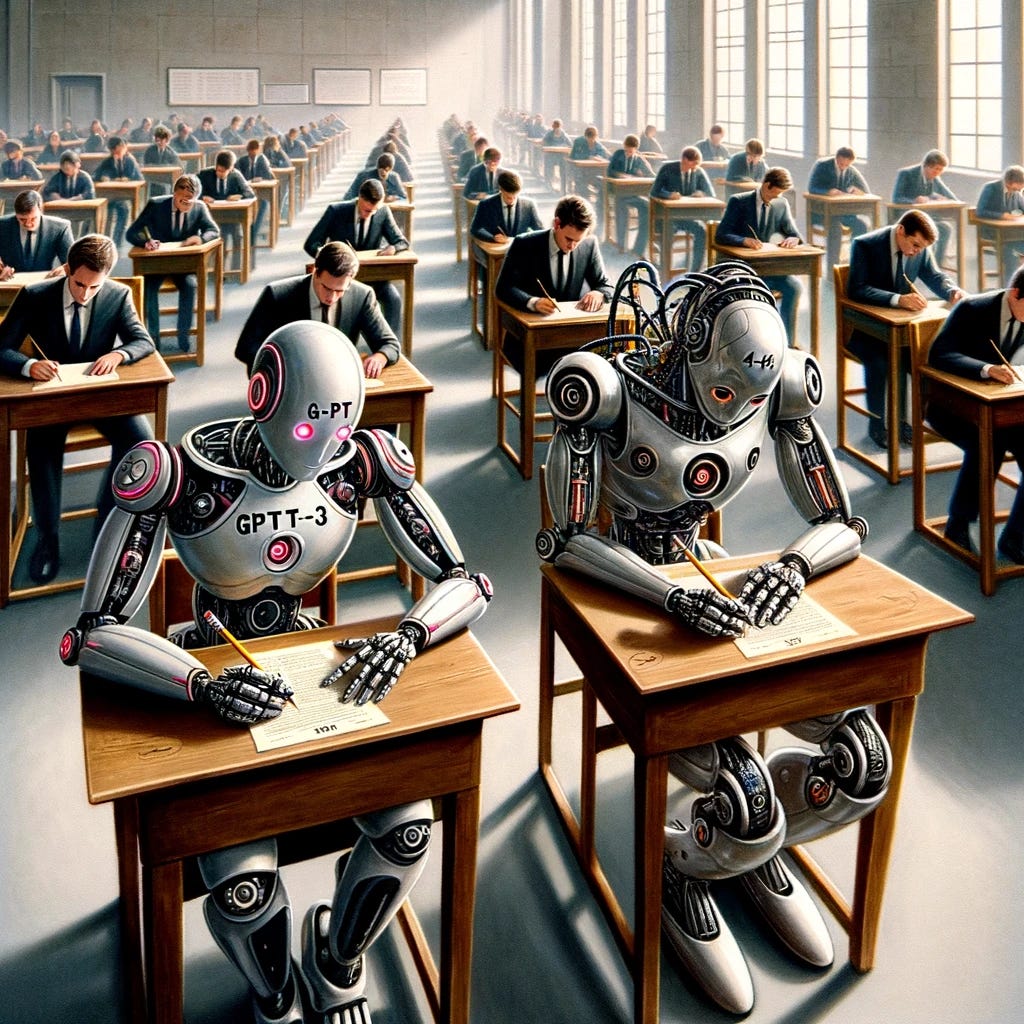

When researchers gave GPT-3.5, the model behind the original ChatGPT, the bar exam and it scored in the 10th percentile relative to human test takers, the engineers didn’t head back to the drawing board to figure out how to improve the model’s abilities; they just made it bigger. When GPT-4, whose parameters and training data size have not been revealed by OpenAI but are likely orders of magnitude larger than its predecessor’s, took the bar exam, it scored in the 90th percentile relative to human law grads. Bigger is better when it comes to these models.

You can bet that if the auto industry, for instance, had figured out that somehow, just by increasing horsepower, cars suddenly gained the ability to fly, or swim, or make dinner at certain thresholds, they would all have behaved just like the big tech firms are now with an all-out pursuit of more. While that’s not how cars work, it is how AI does, at least for now.

We’re all going to hear much more about big numbers in the weeks and months to come. Huge sums of money, gargantuan data sets, and mind-bending processing power. One number small enough to subitize that has my attention is the number six. Six years is how long McKinsey believes it will take for up to 30% of the global workforce to have their jobs displaced by AI. For comparison, peak unemployment during the Great Depression was about 25%.

Over the last 16 months writing The Process, it has been my pleasure to pour myself into each post, and on occasion to hear from readers how my ideas have been received. Writing this newsletter has helped to clarify my thinking and forced me to read deeply into the subjects I write about here. It is my opinion that current efforts to encode and/or legislate our way to human-centric AI are likely insufficient on their own, and given the speed of AI development, the changes predicted by the likes of McKinsey and others may come as unwelcome shocks to the world, if nothing else changes.

I’m not sure it will mean much in the end, but I’ve decided to do something about all this in my own small way — I’m attempting to write a book. I believe there are several avenues of intellectual exploration I want to help open up to wider discussion because doing so may yield wisdom and policy ideas that can increase our chances of realizing the positive potential of AI, technology I continue to believe is marvelous and full of hope.

So, if you notice my posts aren’t coming with their usual frequency, it’s probably because my brain is low on writing juice. However, I do cherish you all and look forward to workshopping ideas here so as to apply them in future chapters of a tome I hope I’ll be able to share soon.

Please, wish me some luck and patience with myself….

With love and more soon,

Philip

Thanks for reading this edition of The Process, a free weekly newsletter and companion podcast discussing the future of technology and philanthropic work. Please subscribe for free, share and comment.

Philip Deng is the CEO of Grantable, a company building AI-powered grant writing software to empower mission-driven organizations to access grant funding they deserve.

Your insights and comments are always interesting. Thank you for writing on this important topic. An observation about the AI-generated images in your article (others will, I'm sure, have noticed this also - it's a pretty common observation): unless otherwise specified, the people in the images are all male-presenting and all white-presenting. It's also assumed that the bronze age person was a) male, b) an older adult, and c) unable or unwilling to wear their hair in a style that required trimming (either on their head or their face). AI has all of the biases of its creators. Another reason to be wary of it.

Thank you for sharing your insights, Philip - and congratulations to you for starting a book! Your writing has taught me, and helped me to understand how AI and nonprofits can work together.