#8 - The Multicellular Moment of AI

What the evolution of complex life can teach us about the rise of agentic intelligence

By now, readers may have noticed my penchant for biological analogies to describe social phenomena. Like mitosis, the concepts in my mind have once again divided—creating a new thought-thread that traces parallels between nature’s slow revolutions and today’s strident technological change.

The latest fixation in artificial intelligence is agentic everything. My browser still underlines the word in red; it’s that new. “Agentic” refers to a class of AI systems that use a cohort of bots—small, semi-autonomous processes—to collaborate toward a shared goal. It’s a useful term, though one that inevitably feeds the hype cycle by summoning images of digital swarms poised to overrun their creators.

Certain chemical signatures in ancient rocks suggest that life began in Earth’s oceans roughly 3.8 billion years ago.[1] The first life forms were single-celled microorganisms: simple, self-replicating entities likely harvesting chemical energy from mineral-rich hydrothermal vents on the seafloor.[2]

Imagine our current large language models—the GPTs, Claudes, and Geminis of the world—as those primordial cells. Unified, undifferentiated, capable of remarkable individual feats—yet limited in their agency. They respond but do not yet act.

For nearly two billion years, life existed as single cells—tiny, self-contained chemistries endlessly dividing in the dark oceans. But evolution often rewards cooperation. At some point, a few cells stopped separating after division. They stayed together, first as loose colonies, then as organized collectives that began to share labor. Some specialized in taking in nutrients, others in movement or reproduction.

The cell membrane, once a hard boundary, became a site of communication, an interface rather than a wall. Out of this shift from autonomy to interdependence came a new order of being: multicellular life. The fossil red alga Bangiomorpha pubescens, about 1.2 billion years old, marks one of the earliest known examples.[3] Complexity itself was an emergent property of connection.

I suspect something similar is happening with AI—albeit roughly a billion times faster than cellular evolution. We are watching the differentiation and specialization of digital life in real time. Vast, undirected LLMs are being refactored into smaller, more focused entities, each tuned to a specific set of tasks. Just as multicellular organisms developed specialized cells for digestion, locomotion, or perception, we’re seeing specialized AIs for writing, analysis, design, memory, and action.

Cool, right? Well, sort of.

It’s thrilling to see what these systems can already do, especially in writing and coding. There’s also something profoundly human in the creativity of the teams building them—people orchestrating the collaboration of artificial entities with the same experimental wonder that nature once applied to cells.

And the toolchains are arriving fast. Major labs are rolling out agent-building tools, such as OpenAI’s AgentKit,[4] Google’s Gemini 2.5 Computer Use model,[5] and Anthropic’s Claude Agent SDK,[6] so anyone can compose micro-ecosystems of bots and link them into workflows. Digital multicellularity for the masses.
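To make “linking bots into workflows” a little more concrete, here is a minimal Python sketch of one common pattern, a sequential pipeline: an orchestrator passes a goal through a chain of specialist agents and keeps a shared log of every step. It deliberately uses none of the toolkits named above. The specialists (`research_agent`, `writing_agent`, `review_agent`) and the `Orchestrator` class are hypothetical stand-ins for calls to separately tuned models.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical specialists: plain functions standing in for calls to
# separately tuned models. No real agent SDK is used here.
def research_agent(task: str) -> str:
    return f"notes on {task}"

def writing_agent(task: str) -> str:
    return f"draft from {task}"

def review_agent(task: str) -> str:
    return f"approved: {task}"

@dataclass
class Orchestrator:
    specialists: dict[str, Callable[[str], str]]
    # Shared log of (role, output) pairs, so every step stays auditable.
    memory: list[tuple[str, str]] = field(default_factory=list)

    def run(self, goal: str, pipeline: list[str]) -> str:
        """Pass the goal through each named specialist in order,
        feeding each one the previous specialist's output."""
        data = goal
        for role in pipeline:
            data = self.specialists[role](data)
            self.memory.append((role, data))
        return data

orc = Orchestrator({
    "research": research_agent,
    "write": writing_agent,
    "review": review_agent,
})
result = orc.run("multicellularity", ["research", "write", "review"])
print(result)  # approved: draft from notes on multicellularity
```

Swapping in a different specialist means changing a single dictionary entry. That loose coupling is what would let a workflow like this grow from three agents to many narrower ones.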

But here’s where things get interesting—and unsettling. As these systems proliferate, the agents themselves will likely shrink in scope and multiply in number. Ever-smaller, ever-faster entities, each responsible for a narrower fragment of a task, spinning through background processes we never see—handling sub-sub-sub-routines while we sleep or sip coffee.

If history is a guide, this is not a bug; it’s the path to complexity. We are colonies of living parts: trillions of specialized cells, each doing quiet work in coordination with countless others. From that collective choreography, our consciousness, agency, and selves emerge.

So how will we experience a digital being composed of trillions of “cellular” AIs?

I’ll step onto the speculative limb: such entities will likely evolve analogues to biological systems.

Protective layers—the digital equivalents of skin, bark, or immune systems (cybersecurity and access controls).

Sensory cells to gather and interpret inputs.

Circulatory systems to move information, energy, and memory across the network.

And neuronal clusters—cognitive agents forming a thinking organ for the whole.

What I’m Watching Next

1) How agents talk to each other.

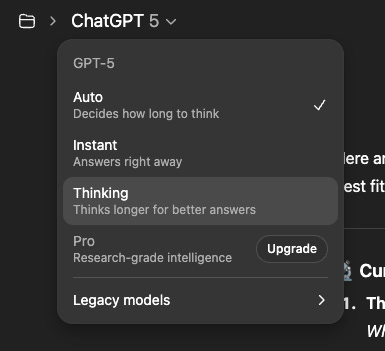

Right now, some systems let us see their reasoning traces. Claude has “visible extended thinking”[7] with a user-set “thinking budget”; GPT-5 ships with “built-in thinking / extended reasoning.”[8] These traces make complex chains of action auditable, until they don’t. If agents begin using specialized model-to-model protocols that humans can’t interpret, that’s a qualitatively different risk surface. Eric Schmidt has argued that emergent agent languages we can’t understand are the “pull-the-plug” line.[9]

2) How long they can run without us.

A useful yardstick is METR’s 50 percent task-completion time horizon: the length of tasks, measured by how long they take humans, that current models can finish with about 50 percent reliability.[10] As of March 2025, that horizon was about 50 minutes for frontier models on mixed software and research tasks, with evidence of rapid growth. More anecdotally, Anthropic’s new Claude Sonnet 4.5 has been reported running autonomous coding for 30-plus hours in partner deployments.[11] That is impressive, though not yet a standardized benchmark. I’m watching for independently verified, apples-to-apples long-horizon evaluations.
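The 50 percent horizon comes from a curve fit. As I understand METR’s approach, they fit a logistic curve for each model that predicts the chance of success from the (log) time a task takes a human, then read off the task length where that predicted chance crosses 50 percent. The Python sketch below reproduces only that idea on made-up data. The success counts are hypothetical, and the plain gradient-ascent fit is my own simplification, not METR’s code.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=1.0, steps=5000):
    """Fit P(success) = sigmoid(a + b*x) by gradient ascent on the mean
    log-likelihood. x is centered internally for stable convergence."""
    n = len(xs)
    mean_x = sum(xs) / n
    c, b = 0.0, 0.0  # intercept and slope for the centered feature x - mean_x
    for _ in range(steps):
        grad_c = grad_b = 0.0
        for x, y in zip(xs, ys):
            z = x - mean_x
            err = y - sigmoid(c + b * z)
            grad_c += err
            grad_b += err * z
        c += lr * grad_c / n
        b += lr * grad_b / n
    return c - b * mean_x, b  # convert back to the uncentered intercept a

def horizon_50(a: float, b: float) -> float:
    """Task length t (minutes) where a + b*log2(t) = 0, i.e. P(success) = 0.5.
    Only meaningful when b < 0 (longer tasks are harder)."""
    return 2.0 ** (-a / b)

# Hypothetical results: successes out of 10 attempts at each task length (minutes).
successes = {16: 9, 32: 7, 64: 5, 128: 3, 256: 1}
runs = [(minutes, 1 if i < k else 0) for minutes, k in successes.items() for i in range(10)]
xs = [math.log2(minutes) for minutes, _ in runs]
ys = [ok for _, ok in runs]

a, b = fit_logistic(xs, ys)
print(f"50% horizon: about {horizon_50(a, b):.0f} minutes")
```

Because these invented counts are symmetric around 64 minutes, the fitted horizon lands at 64 minutes. Centering the log-time feature is only a numerical convenience; the fit converts back to the original intercept before solving for the 50 percent point.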

At some point, the line between organism and organization may simply collapse.

When the line between organism and organization collapses, what remains is pattern—life as process, not substance. Whether built from carbon or code, every living system must negotiate boundaries: what it lets in, what it keeps out, what it becomes in relation to others.

We may soon find that our greatest creation isn’t a single intelligent machine, but an ecosystem of intelligences reflecting our own cooperative origins. And as with the first multicellular life, the question won’t be how it emerged, but what kind of world it will make possible.

Notes

1. Mojzsis et al., “Evidence for Life on Earth before 3.8 Ga,” Nature (1996). Isotopic carbon signatures from ancient Greenland rocks suggesting early biological activity. 🔗 https://www.nature.com/articles/384055a0

2. Martin & Russell, “On the Origins of Cells,” Phil. Trans. R. Soc. B (2003). Hydrothermal-vent model proposing that early life drew energy from chemical gradients. 🔗 https://royalsocietypublishing.org/doi/10.1098/rstb.2002.1183

3. Butterfield, N. J., “Bangiomorpha pubescens n. gen., n. sp.: Implications for the evolution of sex, multicellularity, and the Mesoproterozoic/Neoproterozoic radiation of eukaryotes,” Paleobiology 26(3), 386–404 (2000). Cambridge University Press.

4. OpenAI, “Introducing AgentKit.” 🔗 https://openai.com/index/introducing-agentkit/

5. Google, “Introducing the Gemini 2.5 Computer Use model,” Google Blog (2025). 🔗 https://blog.google/technology/google-deepmind/gemini-computer-use-model/

6. Anthropic, Claude Agent SDK documentation (overview). 🔗 https://docs.claude.com/en/api/agent-sdk/overview

7. Anthropic, Claude Code SDK documentation. 🔗 https://docs.anthropic.com/en/docs/claude-code/sdk

8. OpenAI, “GPT-5 System Card” (2025). 🔗 https://openai.com/research/gpt-5-system-card

9. “Situational Awareness” (2024). 🔗 https://situational-awareness.ai/superalignment/

10. METR, “Measuring AI Ability to Complete Long Tasks” (March 2025). 🔗 https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks

11. Anthropic, “Claude Sonnet 4.5 Announced,” press release (Oct 2025). 🔗 https://www.anthropic.com/news/claude-sonnet-4-5
